Do you need a data warehousing solution for Azure FinOps? Do you have a lot of billing data, and have you been tasked with building a FinOps solution to process it?

Today we’ll take a look at how to design a solution to process your Azure billing data at scale and turn it into beautiful FinOps reports for your organization. We’ll look behind the scenes at the technology that powers CloudMonitor and share some of our learnings from the past 3 years to help accelerate your development.

Problem Statement

- Cost and billing data in Azure can be large. An Azure customer with a $30 million annual Azure bill generates around 6 TB of data per month, or roughly 200 GB per day.

- You want to do things like Cost Anomaly Detection, Forecasting, Budgeting, Tag Normalization and another 101 cool FinOpsy things.

- Your executives, finance team and business users want to subscribe to periodic reports and see KPIs and fancy charts to make sure your cloud spend is producing great ROI.

- Your technical team and business users want to do self-service reporting without the need for IT to map business units to technical resources and spend 3 days generating reports for the business.

- You want to break down costs into unit economics to understand the true business value of your cloud spend.

- Store 5-8 years of historical cost data without breaking the bank.
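To put the volume and retention figures above in perspective, here is a small back-of-the-envelope calculation. It is a sketch that uses only the numbers quoted in the list (about 6 TB per month, kept for 5 to 8 years). The assumptions are ours, not CloudMonitor's: decimal units (1 TB = 1000 GB), 30-day months, and data stored at the quoted export size with no compression.

```python
# Back-of-the-envelope storage estimate for Azure cost/billing data.
# Inputs: ~6 TB/month of billing data, retained for 5-8 years (figures from the text).
# Assumptions (ours): decimal units, 30-day months, no compression applied.

GB_PER_TB = 1000
DAYS_PER_MONTH = 30
MONTHS_PER_YEAR = 12


def daily_volume_gb(monthly_tb: float) -> float:
    """Average daily ingest in GB for a given monthly volume in TB."""
    return monthly_tb * GB_PER_TB / DAYS_PER_MONTH


def retained_volume_tb(monthly_tb: float, years: int) -> float:
    """Total volume in TB accumulated over a retention period, before compression."""
    return monthly_tb * MONTHS_PER_YEAR * years


if __name__ == "__main__":
    monthly_tb = 6.0
    print(f"Daily ingest: {daily_volume_gb(monthly_tb):.0f} GB")
    for years in (5, 8):
        print(f"{years} years retained: {retained_volume_tb(monthly_tb, years):.0f} TB")
```

At the quoted export size this works out to about 200 GB per day and roughly 360 TB (5 years) to 576 TB (8 years) of history, which is why cheap storage and compressed, columnar file formats matter so much for the retention requirement.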

High-Level Solution

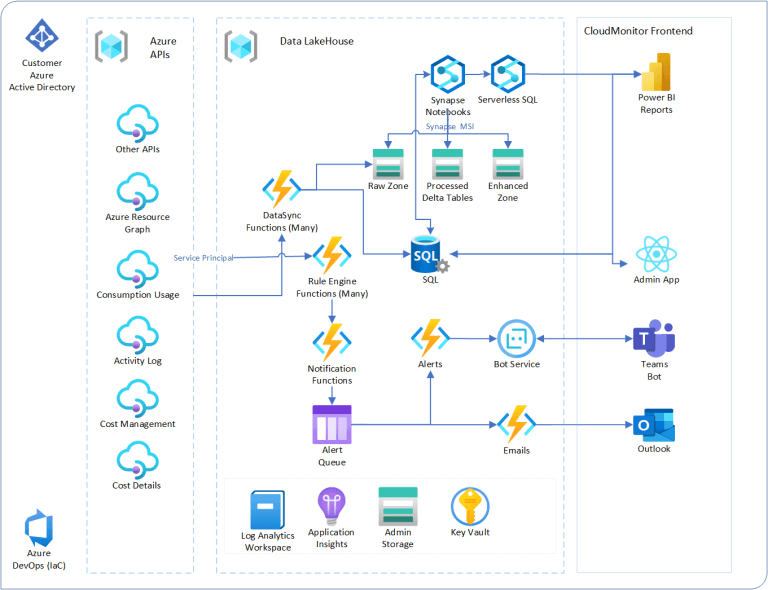

A Big Data problem requires a Big Data solution. Our favorite is the modern data platform, or Data Lakehouse as Databricks calls it: all the benefits of a Data Warehouse, but without the cost and issues of traditional data warehousing. The CloudMonitor solution costs around USD $200 per month to operate for a customer with a $10 million spend. If you are spending more than this on a Big Data solution, then this post is for you.
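For a sense of scale, the platform cost quoted above can be expressed as a share of the cloud bill it analyses. This is a minimal sketch using the two figures from the paragraph; it assumes the $10 million is an annual spend, which the text does not state explicitly.

```python
# Platform operating cost as a percentage of the Azure spend it analyses.
# Figures from the text: ~USD 200/month platform cost, ~USD 10 million spend.
# Assumption (ours): the USD 10 million is an annual figure.

MONTHS_PER_YEAR = 12


def platform_cost_share(monthly_platform_usd: float, annual_cloud_spend_usd: float) -> float:
    """Return the annual platform cost as a percentage of annual cloud spend."""
    annual_platform_usd = monthly_platform_usd * MONTHS_PER_YEAR
    return annual_platform_usd / annual_cloud_spend_usd * 100


if __name__ == "__main__":
    share = platform_cost_share(200, 10_000_000)
    print(f"Platform cost: {share:.3f}% of annual cloud spend")
```

Under that assumption, USD $2,400 a year comes to about 0.024% of the bill being analysed.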

Let’s start with the technology choices. A traditional SQL database is not going to cut it here: it is too expensive and it won’t scale. Spark is a great choice, and you have a lot of options on Azure:

- Hosted Spark on a VM (IaaS)

- HDInsight (IaaS/PaaS)

- Databricks (PaaS)

- Synapse (PaaS)

- Fabric (SaaS)

We’ve picked Synapse (option 4) because of its easy integration with Power BI and the rest of the Azure ecosystem, but really any Spark engine will do! Fabric really is a game changer, but two of our constraints when building CloudMonitor were the ability to deploy the Datalake as a Service and to run it at a super low cost, and Fabric (SaaS) does not satisfy these constraints. Databricks offers far more cluster sizing options and its Spark runtime is heavily optimized; however, there are a few more hoops to jump through in Azure, so we’ll stick to Synapse Pipelines and SQL Serverless for now.

Stay tuned for Part 2 and check out the finished product here.

Rodney Joyce
